Adopting Go at Lunar Way

NOTICE: Originally posted on December 1st 2017. We were known as ‘Lunar Way’ until late 2019.

A previous blog post, “Lunar Way’s journey towards Cloud Native Utopia”, covered our motivation for building Cloud Native services, and highlighted how it helps us achieve velocity and autonomy in our feature squads.

This blog post will cover our experience of introducing the Go programming language to our backend.

Background

Lunar Way’s production backend consists of around 30 microservices deployed in a Kubernetes cluster. We are currently undergoing a “service explosion” as we decompose our monolithic Ruby on Rails service into several services. The majority of our services are written in TypeScript using the Node.js runtime (Node), and to simplify the integration with some partners we have taken advantage of code generation from WSDLs to Java.

Introducing a New Language

Before introducing a new language we needed to consider whether the benefits were worth the size of the task. We use around 10 different internal npm packages in our Node applications for common concerns and conventions such as log format, event communication, gRPC, database repos, and localisation. In addition, we use Swagger for specifying our services’ APIs. Our build and deployment process is handled by Jenkins (using Docker), so dependency management, the build process, and the test phase all have to be set up again for a new language.

Before settling on Go we had a good look at the language, followed the ecosystem around it, and tested it against a couple of concrete use cases during a hackathon in March 2017. We feared Go would be too low-level (pointers), or lack the appropriate libraries given the smaller community.

Due to differences between TypeScript and Go, some of the internal packages ended up looking different than we expected. The lack of generics led us to use code generation for our database “library”, instead of using database repositories with generics. Our event consumer/publisher package for Go relies heavily on goroutines and channels, which has made it very performant.
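To give an idea of what goroutines and channels look like in an event consumer, here is a minimal sketch. It is not our actual package: the `Event` type, the `consume` function, and the worker count are hypothetical names chosen for illustration. A fixed number of goroutines read events from one channel and send results on another.

```go
package main

import (
	"fmt"
	"sync"
)

// Event is a hypothetical message type used only for this sketch.
type Event struct {
	ID      int
	Payload string
}

// consume starts the given number of worker goroutines. Each worker reads
// events from in, applies handle, and sends the result on the returned channel.
func consume(in <-chan Event, workers int, handle func(Event) string) <-chan string {
	out := make(chan string)
	var wg sync.WaitGroup
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for ev := range in {
				out <- handle(ev)
			}
		}()
	}
	// Close out once every worker has finished draining in.
	go func() {
		wg.Wait()
		close(out)
	}()
	return out
}

func main() {
	in := make(chan Event)

	// Publish a few events and close the channel when done.
	go func() {
		defer close(in)
		for i := 1; i <= 5; i++ {
			in <- Event{ID: i, Payload: "hello"}
		}
	}()

	results := consume(in, 3, func(ev Event) string {
		return fmt.Sprintf("handled event %d", ev.ID)
	})

	// Results arrive in whatever order the workers finish.
	for r := range results {
		fmt.Println(r)
	}
}
```

Closing the input channel is what shuts the workers down, so no extra signalling is needed in this simplified version.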

At the time of writing, we have two non-user-facing Go services in production and a handful in the pipeline.

Why Go?

Go caught our attention for several reasons, such as a powerful concurrency model, a small runtime, performance, static typing, and maintainability through simplicity. The fact that most components in our infrastructure are written in Go strengthened our interest in learning some of the internals. Because of Node’s single-threaded model, better support for CPU bound work was one of the features we were looking for in a language to supplement it. The creator of Node, Ryan Dahl, had an interesting comment on Node and Go on a podcast a couple of months ago:

“If you’re building a server, I can’t imagine using anything other than Go. That said, I think Node’s non-blocking paradigm worked out well for JavaScript, where you don’t have threads” - Ryan Dahl

Apart from the above, Go seems to have a lot of momentum, and is being described as the language of the cloud. Stack Overflow’s Developer Survey has had Go in the top 5 in the category “Most loved”, and in 2017 Go came in at #3 in the “Most wanted” category, its first appearance on this list.

Runtime

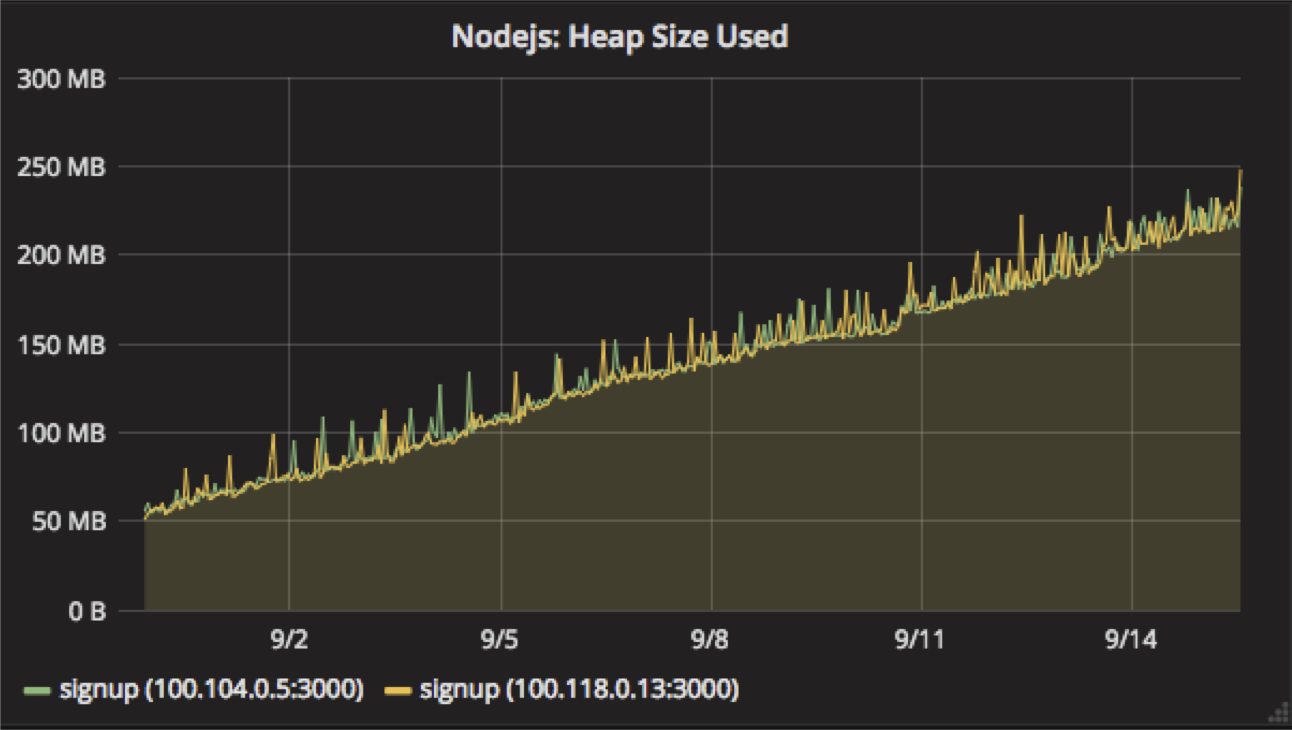

As the number of services increases, the size of the runtime becomes more relevant. The figure shows the minimum and maximum pod memory metrics from Kubernetes over the last week, grouped by runtime. So far we have seen 6–14 MB used in our Go services. To be fair, only event handling and gRPC are used so far in our Go services in production. It is, however, pretty good compared to our smallest Node service, which uses 53 MB while only handling events. Using the highest observed pod memory for each, these measurements suggest that around 71 Go services fit in the memory of one Java service.

Go compiles to binaries, which we add to empty Docker scratch images. This results in image sizes ranging from 4 to 8 MB, where the largest images include packages for event handling, REST endpoints and gRPC endpoints. In addition to the small image size, we get the security benefit of leaving out the many unnecessary dependencies that come with larger base images. The image sizes shown below aren’t only due to the size of the language runtime, but also to how well we construct the images. Go makes that easy by only requiring a binary.

CPU Bound Work

Another area where Go really shines is CPU bound work and concurrency. As we are strangling Ruby on Rails, we aren’t interested in Node for tasks like PDF generation. A previous example with image manipulation in Node showed us how CPU bound work blocked the event loop and caused long response times. This was solved by offloading the work to an AWS Lambda function to utilize Node’s async I/O.

Go’s concurrency model makes this a lot easier: you work with a few simple concurrency primitives, and underneath the Go scheduler multiplexes them onto operating system threads.

Learning Curve

Go is a simple language that is easy to get started with, and the “Tour of Go” works very well. Structuring a project and understanding how the conventions around the GOPATH, workspaces, dependencies, and multiple repositories fit together initially caused a bit of hassle. A couple of concepts in Go differ from most other languages. State belongs in structs and behaviour belongs in functions, instead of having both on a class. Interfaces are implemented implicitly instead of being explicitly declared. Inheritance doesn’t exist, but embedding allows you to “borrow” pieces from other structs, or to compose an interface from other interfaces. Concepts such as channels and goroutines build on CSP and have some overlap with the actor model. These concepts were new to some of us, but they wrap the error-prone parts of concurrency in a simple, effective way.
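A small sketch of implicit interfaces and embedding may help here. All type and function names in it (`Greeter`, `Namer`, `Person`, `Employee`, `introduce`) are made up for this illustration.

```go
package main

import "fmt"

// Greeter is satisfied by any type that has a Greet method.
// There is no "implements" keyword.
type Greeter interface {
	Greet() string
}

// Namer is satisfied by any type that has a Name method.
type Namer interface {
	Name() string
}

// NamedGreeter is composed from two other interfaces by embedding them.
type NamedGreeter interface {
	Greeter
	Namer
}

// Person holds state; its behaviour is defined as methods below.
type Person struct {
	FirstName string
}

func (p Person) Name() string  { return p.FirstName }
func (p Person) Greet() string { return "Hi, I'm " + p.FirstName }

// Employee embeds Person and thereby "borrows" its fields and methods.
type Employee struct {
	Person
	Squad string
}

// introduce accepts anything that satisfies NamedGreeter.
func introduce(g NamedGreeter) string {
	return g.Greet() + " (" + g.Name() + ")"
}

func main() {
	e := Employee{Person: Person{FirstName: "Martin"}, Squad: "Platform"}
	// Employee never mentions NamedGreeter, yet it satisfies the interface
	// through the methods promoted from the embedded Person.
	fmt.Println(introduce(e))
}
```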

The standard library provides a lot of what you need, and in high quality. The example below only uses the standard library. It shows how a custom struct can be written to a buffer, or a file, using the built-in io.Writer interface. This example is inspired by the following blog post.

    package main

    import (
        "bytes"
        "encoding/json"
        "fmt"
        "io"
        "os"
    )

    // User struct wraps the state and json mappings
    type User struct {
        ID   string `json:"id"`
        Name string `json:"name"`
        Age  int    `json:"age"`
    }

    // Write method takes the io.Writer interface as input
    func (p *User) Write(w io.Writer) {
        b, _ := json.Marshal(*p)
        // Writes to the io.Writer whether it is a buffer, file, or another implementation of io.Writer
        // Error handling is left out of this example
        w.Write(b)
    }

    func main() {
        me := User{
            ID:   "828a4b25-daf9-4857-96de-4af40aa6da2e",
            Name: "Martin",
            Age:  28,
        }

        // The first io.Writer is a buffer
        // The json marshalled user is written to the buffer and printed out
        var b bytes.Buffer
        me.Write(&b)
        fmt.Printf("%s", b.String())

        // The second io.Writer is a file
        // Defer closes the file when the surrounding function (main) returns
        // The file is saved using the same method as above
        file, _ := os.Create("demo.json")
        defer file.Close()
        me.Write(file)
    }

Handling JSON is less flexible than in TypeScript, but on the flip side you can trust your runtime that a variable is whatever you specified it to be.
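To make that trade-off concrete, here is a small sketch using `encoding/json` from the standard library. The `User` struct mirrors the one above, while `parseUser` is a hypothetical helper added for this illustration. A payload whose `age` is a string is rejected with an error instead of silently ending up in an `int` field.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// User mirrors the struct from the example above.
type User struct {
	ID   string `json:"id"`
	Name string `json:"name"`
	Age  int    `json:"age"`
}

// parseUser decodes JSON into a User and returns an error
// if a field does not match the declared type.
func parseUser(data []byte) (User, error) {
	var u User
	if err := json.Unmarshal(data, &u); err != nil {
		return User{}, err
	}
	return u, nil
}

func main() {
	// Valid payload: age is a JSON number.
	u, err := parseUser([]byte(`{"id":"1","name":"Martin","age":28}`))
	fmt.Println(u.Age, err)

	// Invalid payload: age is a JSON string, so decoding fails.
	_, err = parseUser([]byte(`{"id":"1","name":"Martin","age":"28"}`))
	fmt.Println("error:", err)
}
```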

The tooling around Go is, in my opinion, exceptional. Building, testing and running are blazingly fast, and every detail seems thoroughly thought out. Among my other favourite features are table-driven tests, the code formatter, documentation, the race detector, and simple cross-compiling.

Dependencies

Dependency management is often mentioned as one of the big challenges of Go. A working group is steadily working on an “official experiment” called dep, which we have been using with great success so far. When we started out the documentation was limited, but it has improved a lot. Dep may not be quite there yet, and it’s still a bit slow, but it allows us to lock down our dependencies, among other things, which is a great start. That matters to us: with npm, we had an issue where a transitive dependency introduced a memory leak, even though all our direct dependencies were locked to a specific version. 😬

Conclusion

Overall, we have been very satisfied with Go. It fits well into our existing setup with its small runtime. The number of services becomes less significant in terms of resource overhead, which allows for fast and frequent deployment of smaller pieces. The powerful concurrency model fills a gap that we were about to encounter, and Go gives us a lot of control without feeling too low-level. The lack of generics is still a bit annoying, but using code generation works better than expected, and maybe that’s the price of simplicity.

The simplicity of the language makes it explicit and aligns our code style internally, which (hopefully) will make it easier to get acquainted with a new service.

Introducing a new language takes time and effort, but it has been a very positive experience, and Go has definitely found its place at Lunar Way.
