CloudNativeCon+KubeCon North America Recap

Lunar is a tech company at heart, and that requires us to stay up to date with new technologies that we can potentially take advantage of to improve the experience for our customers. This time, we attended CloudNativeCon+KubeCon North America 2022 in Detroit, and with this blog post I will take you along with me.

Me in the Keynote hall with room for approximately 7000 in-person attendees

Track chairing the Application + Development + Delivery track

CloudNativeCon+KubeCon North America in Detroit is a wrap. It’s been a busy, but very educational, fun, and overall great week. I’ve had the opportunity to meet old and new friends in the community.

I was selected as a program committee member and track chair for the Application + Development + Delivery track. The role includes grading submissions, providing constructive feedback, and ensuring diversity among speakers. As track chair, I read the program committee’s comments and ratings and further ensure that no company is overrepresented, that the line-up is diverse, and that the content is great and relevant, so the co-chairs have a strong basis for their final selection. Being a track chair also includes moderating the track’s scheduled sessions in person on site. It’s been a lot of hard work, but also very rewarding to get a sneak peek into what the community is working on and considers worth sharing with the rest of the community.

All of this also benefits Lunar and the work that I’m hired to do, namely ensuring that we are moving in the right direction from a technical and architectural standpoint. All of these learnings and experiences are being taken into consideration whenever we need to evaluate new technology, ways of working, etc.

Sharing our experiences with Linkerd at ServiceMeshCon

Besides my roles as program committee member and track chair, I also gave a talk at the co-located event ServiceMeshCon North America. My talk focused on our adoption of service mesh at Lunar, more specifically our adoption of Linkerd. Why do we see value in adding this technology to our stack? What kind of features does it provide? And how did we choose to implement it?

It was a great pleasure to share our experiences and the talk was well received, with good questions and discussions afterwards. The talk was recorded and will be available on YouTube.

Me speaking at ServiceMeshCon, a co-located CloudNativeCon+KubeCon conference

Photo credits: Cloud Native Computing Foundation

Trendspotting - what should we be looking for?

CloudNativeCon+KubeCon is a big conference, with over 7000 in-person attendees and 15000+ when counting virtual attendees too. This of course means that the content of this section is biased toward the talks I attended and the people I spoke with during my week in Detroit. Ricardo Rocha, CloudNativeCon+KubeCon Co-chair and Computing Engineer at CERN, also pointed this out in his Thursday keynote: we all have our own custom Swiss knife of what cloud native is to us.

Ricardo Rocha in his keynote “A Cloud Native Swiss Knife”

Overall, these are the topics I discussed the most:

DevX - Backstage in particular is taking off, now with its own conference.

Crossplane - building abstractions: should we build providers or operators?

Service Mesh - the ongoing discussion about sidecarless meshes

GitOps - massive adoption!

Community - how are we getting on in a post-pandemic world?

Cluster API - multi-cluster management

Let’s go through the topics one by one, cover the trends within each topic, and discuss what we do at Lunar.

DevX

Backstage, a developer portal built by Spotify and later donated to the CNCF, is really taking off. This was evident from the dedicated co-located conference, BackstageCon, on Monday. More than 1,500 contributors have now contributed to Backstage, and over 400 companies have officially adopted it. Lunar is part of these numbers, as we are both an adopter and have made several contributions to the project.

Backstage Open Source statistics

During the conference, Spotify announced what they call the Spotify Plugins subscription, a paid bundle of plugins. To get more insight into this move, I spoke with someone from Spotify at the Backstage project pavilion, who explained that Spotify is investing heavily in Backstage and the open source community, but needs a way to sustain the cost.

To be honest, I find this an interesting move, though I haven’t figured out yet whether it is good or bad. Monetizing plugins could make sense, as Spotify is probably looking for a way to sustain its engagement in the open source community. However, it could also lead more companies to see value in providing paid offerings, which may hurt the open source development of plugins.

Another player in this field is Roadie, which is also building plugins that will not be available to the wider community, but has chosen a different model: it packages Backstage and provides it as a service. It’s certainly an interesting development; I just hope it will not lead to unhealthy rivalries that slow down the great progress of the project.

If you are interested in reading more about the recent announcement, see this blog post from Spotify.

Apart from the paid plugins announcement, BackstageCon had really good content: both end-user adoption stories and specific technical topics, such as the rewrite of the backend system that powers Backstage. If you are interested in exploring the content of BackstageCon, the recordings are already available and can be found here.

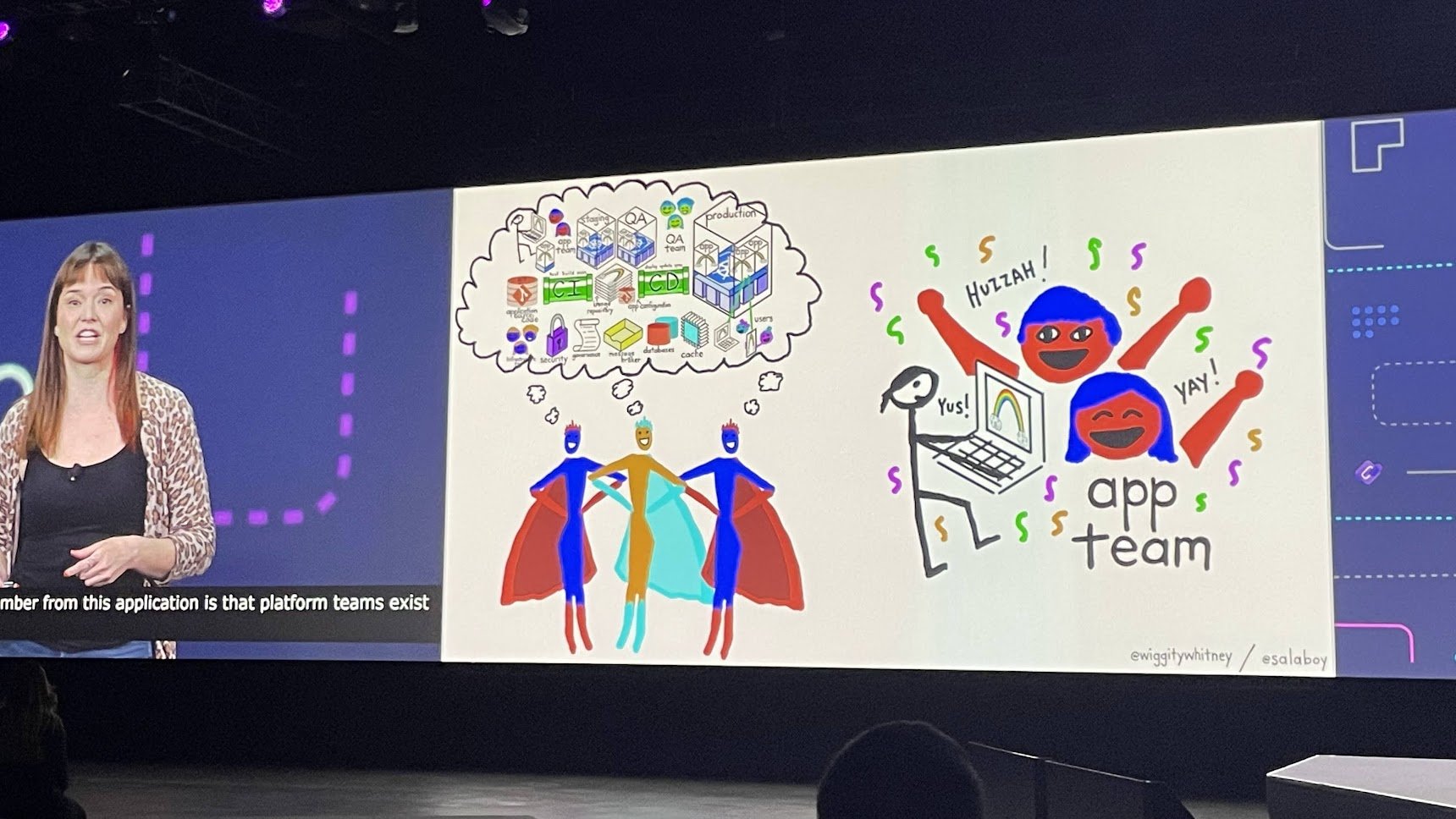

Besides BackstageCon, Developer Experience was also a big topic at the main conference. I want to highlight Thursday morning’s keynote by Mauricio Salatino, Staff Engineer at VMware, and Whitney Lee, Staff Technical Advocate. It had great energy and slides, and it also gave a really nice overview of what platform teams do and why they are important.

Mauricio Salatino and Whitney Lee on the value of Platform teams

Another DevX-related talk was by my good friend Jessica Andersson, Product Area Lead Engineering Enablement at Kognic. She shared her experiences running a platform team, focusing on the return on investment and business value of the improvements the team helps developers with.

Jessica Andersson on “Why we should care about Developer Experience”

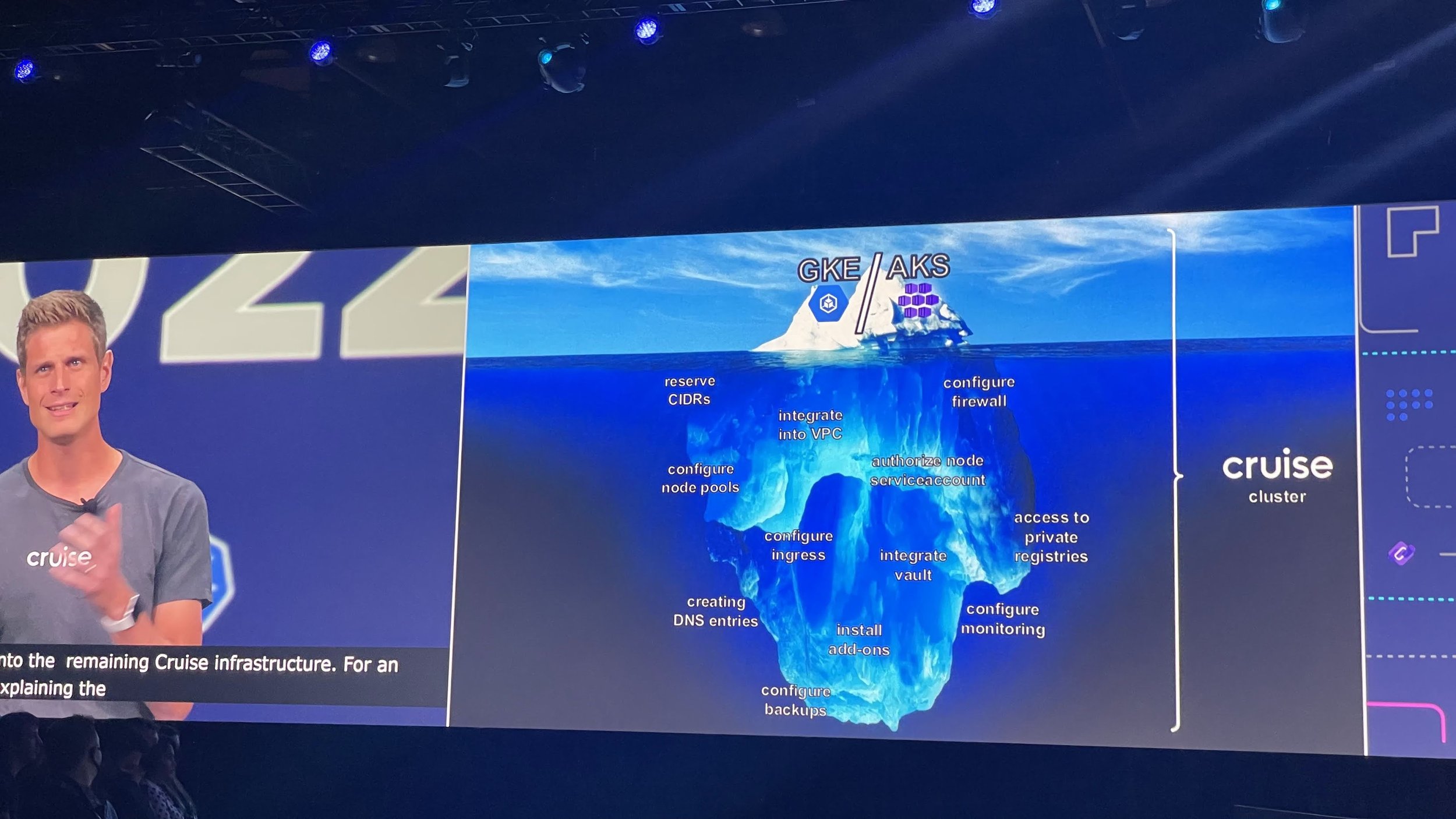

The last talk I would like to highlight is the keynote by Cruise. It also focused on the role of platform engineering teams. It made the point that Kubernetes (managed or not) is not the end goal. Instead, Kubernetes is a platform for building platforms, namely Internal Developer Platforms.

Jonny Langefeld, Staff Software Engineer at Cruise, on how GKE/AKS only manages the tip of the iceberg

DevX, or Developer Experience, is a topic that is very close to our heart at Lunar. We have had a big focus on it since we embarked on our Cloud Native journey. We learned the hard way that shifting operational responsibility to our developers without any support didn’t work, because it took a lot of focus away from actual feature development. That’s why, back in 2017, we began building abstractions and providing a self-service platform to our developers.

In 2018, we created shuttle, our first open source project, and took this to a new level. Shuttle let us move much of the operational configuration and management to a central place, which developers consume as a self-service platform. Over time, the platform squad has provided more and more functionality centrally, such as compliance checks, security checks, and best practices.

The most recent expansion in this area has been our adoption of Backstage. Backstage allows our platform organization to stitch together all the different technologies that we use internally at Lunar and provide a single interface for developers to consume. We are essentially building what the community refers to as an Internal Developer Platform (IDP). Backstage further provides us with a dynamically updated catalog of all our software assets, making it easier to adhere to the requirements set by the Danish FSA.

Crossplane - abstractions, abstractions…

Crossplane was once again a hot topic. I didn’t actually attend a single talk on Crossplane, but it still came up frequently in conversations. For those not familiar with Crossplane, it is a project focused on the declarative configuration of basically anything that provides an API. Crossplane also allows engineers to build and expose abstractions, called composite resources, on top of lower-level resources supplied by a set of providers. One example is the AWS provider, which maps AWS APIs and exposes them as resources in Kubernetes. A platform engineer can then create an abstraction, e.g. a database, composed of multiple resources such as the instance, security group, parameter group, and IAM user.

At Lunar we have experimented a bit with Crossplane, because we want to provide cloud infrastructure resources as Kubernetes custom resources and build abstractions on top of them. We have already set up a PoC where Crossplane is in charge of creating an S3 Bucket for a service. This composition contains more than just the Bucket. It also configures the policy and access roles, and it automatically provides the needed configuration back to the service immediately after creation. Adding Crossplane to our technology stack will remove some of the friction for developers who need cloud resources, and it will also automate how they obtain credentials for these resources. The current workflow is not ideal, because it forces developers to learn a separate technology that is decoupled from their daily workflow.
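To make the idea of a composition more concrete, here is a toy model in Python of what the S3 Bucket composition does conceptually. A developer makes one small request, the platform expands it into several lower-level managed resources, and the connection details flow back to the service. Everything here is hypothetical and is not Crossplane’s API. That includes the names `BucketClaim` and `compose_bucket`, the naming scheme, and the default region. In Crossplane itself, compositions are declared as Kubernetes resources, and connection details are typically written to a Kubernetes Secret.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Toy model of a Crossplane-style composition. All names are hypothetical;
# this is NOT Crossplane's API, only the shape of the idea.


@dataclass
class BucketClaim:
    """What a developer asks for: a bucket for their service."""
    service: str
    region: str = "eu-west-1"  # assumed default region


@dataclass
class ManagedResource:
    """A lower-level cloud resource the platform creates on the developer's behalf."""
    kind: str
    name: str
    spec: Dict[str, object] = field(default_factory=dict)


def compose_bucket(claim: BucketClaim) -> Tuple[List[ManagedResource], Dict[str, str]]:
    """Expand one claim into a bucket, an access role and a policy binding them."""
    bucket = f"{claim.service}-data"
    role = f"{claim.service}-bucket-access"
    resources = [
        ManagedResource("Bucket", bucket, {"region": claim.region}),
        ManagedResource("Role", role, {"usedBy": claim.service}),
        ManagedResource(
            "Policy",
            f"{role}-policy",
            {
                "attachedTo": role,
                "actions": ["s3:GetObject", "s3:PutObject"],
                "resource": f"arn:aws:s3:::{bucket}/*",
            },
        ),
    ]
    # Connection details handed back to the service; in Crossplane these
    # would typically end up in a Kubernetes Secret the service can mount.
    connection = {"BUCKET_NAME": bucket, "AWS_REGION": claim.region, "ROLE_NAME": role}
    return resources, connection
```

For example, calling `compose_bucket(BucketClaim(service="payments"))` yields three resources (Bucket, Role, Policy) and a connection map whose `BUCKET_NAME` is `payments-data`. The developer only ever sees the claim, and the platform squad owns the expansion logic.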

Service Mesh - sidecarless or not?

The big topic within the service mesh space continues to be whether or not to use sidecar proxies. Istio recently launched Ambient mesh, which removes the sidecar proxy by pushing some of its concerns down to a lower layer. This is similar to Cilium Service Mesh, which pushes them into the kernel using eBPF. However, proxies cannot be removed entirely, because Layer 7 features still require one. Instead of running as a sidecar in every pod, the proxy is shared, for example one per node deployed as a DaemonSet.

The big question is whether we are there yet. I really think the answer is: it depends. The technology is definitely maturing, but the sidecar model still has its place due to its simplicity. I also gave an interview on this topic for TechTarget. In our situation at Lunar, I don’t think it makes sense to adopt an eBPF-based service mesh, at least not yet. What I really like about our current solution with Linkerd is its simplicity. The sidecar model is “simple”: we know how containers work, and we know how Kubernetes handles the lifecycle of pods and containers. That makes it well-known territory to operate, which in turn minimizes risk and ensures a fast Mean Time to Recovery (MTTR). A sidecarless service mesh has a lot more moving parts, some running in kernel space and some in user space. This increases the complexity and the operational knowledge required to run such a mesh.

There was a great panel at ServiceMeshCon with representatives from the different projects discussing, among other things, this very question. I highly recommend watching it on YouTube once it’s published.

Service Mesh Panel at ServiceMeshCon

At Lunar, we have been using Linkerd for a while now, and this was also the topic of my talk at ServiceMeshCon at the beginning of the week. The service mesh provides Lunar with a number of great features, including:

Mutual TLS (mTLS) internally in our environments

gRPC load balancing - Linkerd provides proper load balancing between pod replicas and thereby ensures higher availability.

Service Profiles - resiliency mechanisms in the infrastructure layer

Policies - control who can talk to whom

Multi-cluster communication - a secure communication path between clusters hosted with different cloud providers.
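The gRPC load balancing point deserves a short illustration. gRPC multiplexes many requests over a single long-lived HTTP/2 connection. A plain Kubernetes Service balances at the connection level, so one client can end up sending all of its requests to a single replica. A mesh proxy instead balances each request. Linkerd’s documentation describes its balancing as latency-aware, based on an exponentially weighted moving average (EWMA) of response times. The sketch below shows that general technique as power-of-two-choices over per-endpoint EWMA latency. It is not Linkerd’s implementation, and the class name, the endpoint names and the smoothing factor `alpha` are assumptions made for illustration.

```python
import random
from typing import Dict, List, Optional


class EwmaBalancer:
    """Per-request load balancing: power of two choices over EWMA latency.

    Illustrative sketch only, not Linkerd's actual balancer.
    """

    def __init__(self, endpoints: List[str], alpha: float = 0.3, seed: Optional[int] = None):
        if not endpoints:
            raise ValueError("need at least one endpoint")
        self.alpha = alpha  # weight of the newest sample (hypothetical tuning value)
        # Unobserved endpoints start at 0 ms, so new replicas get tried early.
        self.latency: Dict[str, float] = {ep: 0.0 for ep in endpoints}
        self.rng = random.Random(seed)

    def pick(self) -> str:
        """Choose an endpoint for the next request."""
        names = list(self.latency)
        if len(names) == 1:
            return names[0]
        # Sample two distinct endpoints and send the request to the faster one.
        a, b = self.rng.sample(names, 2)
        return a if self.latency[a] <= self.latency[b] else b

    def observe(self, endpoint: str, latency_ms: float) -> None:
        """Fold a measured response time into the endpoint's moving average."""
        prev = self.latency[endpoint]
        self.latency[endpoint] = self.alpha * latency_ms + (1 - self.alpha) * prev
```

Every request goes through `pick()`, so a replica that slows down receives fewer requests on later calls, even though the client keeps a single connection to its proxy. Comparing two random endpoints, rather than always taking the single fastest one, avoids sending all traffic to one replica. Note that in this strict form the slowest replica never wins and is never re-measured. Production balancers typically also factor in outstanding requests and time-based decay, which this sketch leaves out.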

GitOps - declarative, declarative, declarative

I didn’t attend any GitOps-focused talks, but that wasn’t necessary to see that GitOps is now the de facto way to deliver services to production Kubernetes clusters, using either Argo CD or Flux (which we use internally at Lunar).

At Lunar, we were early adopters of this pattern, having adopted it internally back in 2019. GitOps allows us to store the source of truth of our environments in a Git repository. This repository acts as a gate between our environments and our developers. All changes to an environment go through this repository, so every change is recorded in a log and can be retrieved for audit purposes. Storing the source of truth centrally also allows us to restore our environments from the data stored in these repositories, which enables cluster failover and helps us prepare for failure.
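At its core, a GitOps controller such as Flux or Argo CD runs a reconciliation loop. It reads the desired state from the Git repository, compares it with what is running, and converges the cluster towards Git. The sketch below models only that comparison-and-apply step. Both states are plain dictionaries mapping a resource name to its spec. The function names and data shapes are hypothetical and are not Flux’s API. The `prune` flag mirrors the idea of removing resources that have been deleted from Git.

```python
from typing import Dict, List, Tuple

# Hypothetical data shapes: resource name (e.g. "Deployment/payments") -> spec.
State = Dict[str, Dict[str, object]]
Action = Tuple[str, str]  # (verb, resource name)


def plan(desired: State, actual: State, prune: bool = True) -> List[Action]:
    """Work out which actions bring the cluster in line with Git."""
    actions: List[Action] = []
    for name in sorted(desired):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != desired[name]:
            actions.append(("update", name))  # drift, or a new commit in Git
    if prune:
        # Anything running that is no longer declared in Git gets removed.
        for name in sorted(actual.keys() - desired.keys()):
            actions.append(("delete", name))
    return actions


def apply_plan(actions: List[Action], desired: State, actual: State) -> None:
    """Execute the plan against `actual`, a stand-in for the cluster."""
    for verb, name in actions:
        if verb == "delete":
            del actual[name]
        else:
            actual[name] = dict(desired[name])


def reconcile(desired: State, actual: State) -> List[Action]:
    """One iteration of the loop; a controller would repeat this periodically."""
    actions = plan(desired, actual)
    apply_plan(actions, desired, actual)
    return actions
```

Running `reconcile` a second time returns an empty list, because the cluster already matches Git. The same property lets an empty, freshly created cluster be rebuilt entirely from the repository, which is what makes restoring environments from Git possible.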

Seeing this become more or less a de facto standard is a great validation of the method we chose.

Community

Cloud Native and Kubernetes are all about the people and the community around the projects. This community continues to amaze me with its kindness and openness. Cloud Native technology is built in the open, by the community, for the community, and everybody is encouraged to contribute. Contributions can be anything: code, comments on issues, shared experiences, docs fixes, etc.

At Lunar we are big consumers of open source, and we favor open source over proprietary technologies. We use quite a few open source projects, both in the services we build and in the infrastructure supporting our environments. We also try to contribute back as much as possible, whether through feedback to projects, fixes, ideas, or sharing our experiences with a particular project. We have also developed a few projects ourselves that we have open sourced and shared with the community.

In addition, we have a good handful of people who enjoy sharing experiences at conferences and meetups. Last but not least, we engage with the local communities as organizers of meetups and conferences, which further strengthens our brand as a technology company.

Cluster API

Cluster API is gaining more and more traction, and many companies are actively implementing or experimenting with it to achieve declarative, continuously reconciled cluster management.

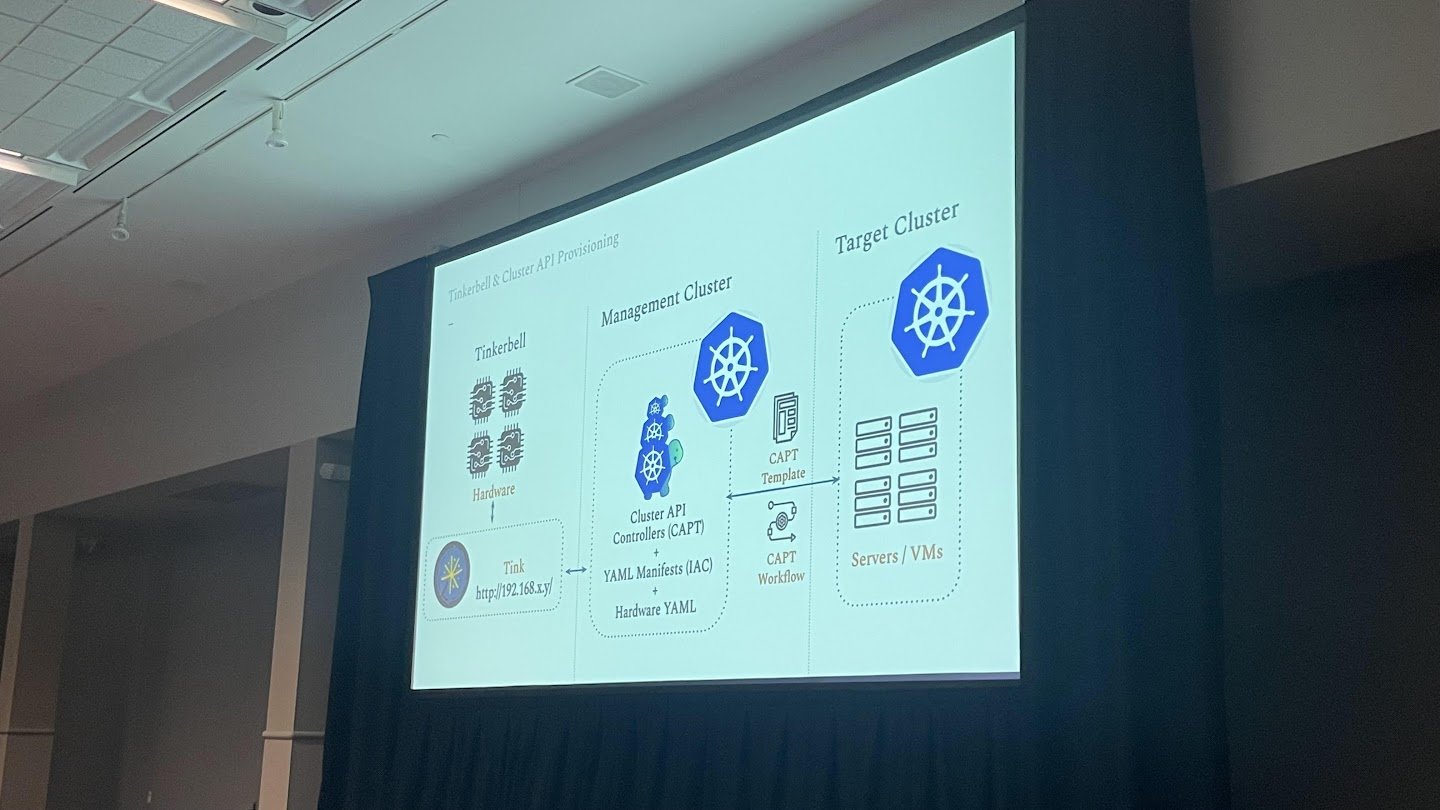

I attended a talk by Katie Gamanji, Sr. Engineer at Apple, on bare-metal clusters with Tinkerbell and Cluster API. Tinkerbell is a way to standardize infrastructure and application management using the same API-centric, declarative configuration and automation approach pioneered by Kubernetes. Many companies, especially in regulated industries, are looking at Tinkerbell and Cluster API to manage bare-metal clusters, whether these clusters are placed in the cloud or on-premises.

Cluster API with Tinkerbell by Katie Gamanji, Sr. Engineer at Apple

We are actively working on Cluster API internally at Lunar. We have been using Kubernetes since early 2017, and it provides us with a highly scalable and cloud-agnostic runtime for our microservice architecture. However, the way we have managed Kubernetes up until now has some flaws, because the workflow is not optimized. Once Cluster API is implemented, we will be able to use the exact same workflow for Kubernetes configuration changes as we do for changes to application code.

This is just one of the things Cluster API helps us with. Among other things, it will help us align our Kubernetes cluster configurations across cloud providers. One issue we have seen lately with cloud provider managed Kubernetes is that we get a different flavor depending on which cloud the cluster runs on. This requires our platform engineers to have a broader knowledge of technologies, which is not good. During incidents, this will definitely result in a slower MTTR, and in general it increases risk.

Hanging out with Phippy and Friends. Phippy and Friends are characters from The Illustrated Children’s Guide to Kubernetes.

Wrapping up

All in all, it has been a great experience and a good validation that the principles we build our platform on are still valid. I very much look forward to continuing the discussions and following the community at the European edition in Amsterdam in April next year.

If you are interested in reading more takeaways, I live-tweeted most of the sessions I attended. Check out my Twitter feed.

Big shout-out to Nina Jensen and Bjørn Sørensen for providing great feedback on this post.